Scaling the Summit: Distributed Inference with Meta-Llama-3.1-405B using vLLM

This post details the technical approach, configuration, and key insights from deploying one of the largest language models currently available using distributed inference techniques.

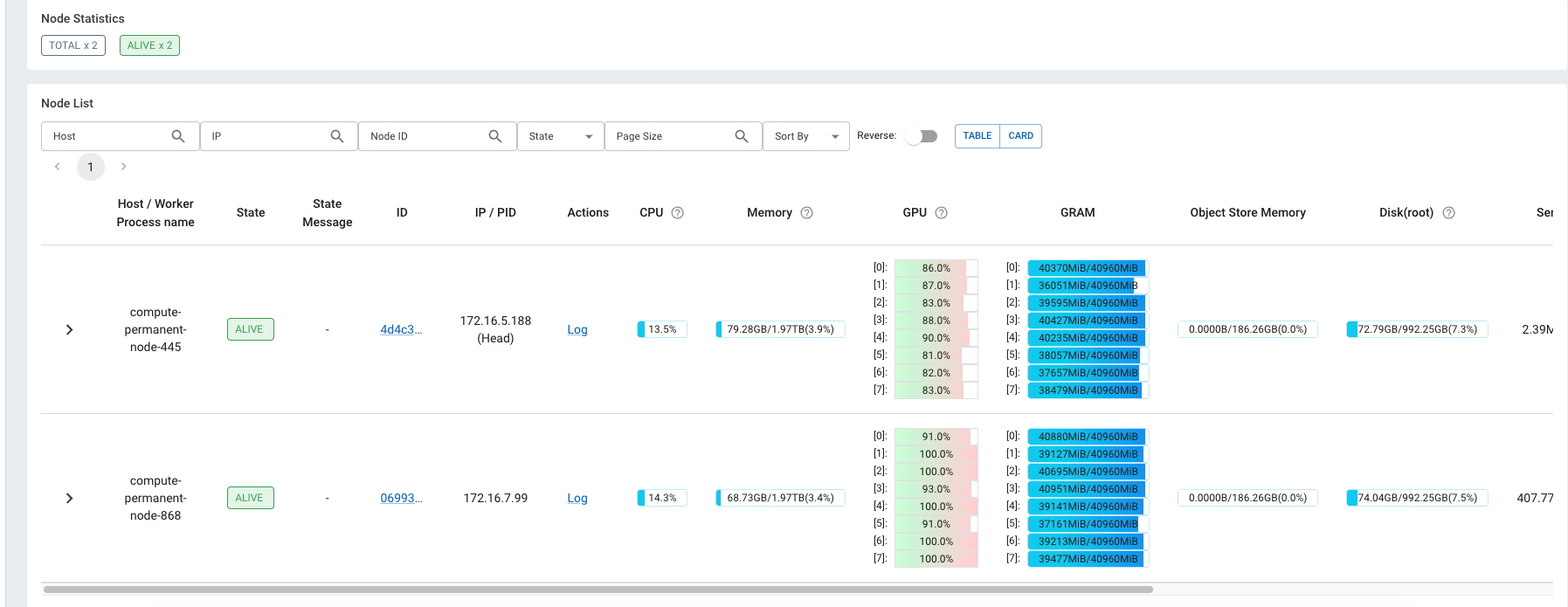

Figure: Llama 405B - FP8 distributed inference on 2 Oracle A100 Nodes

Figure: Llama 405B - FP8 distributed inference on 2 Oracle A100 Nodes

The Challenge: 405 Billion Parameters

Following my successful DPO fine-tuning project on Llama 3.1 8B, I took on an even more ambitious challenge: running inference on the massive Meta-Llama-3.1-405B model. This post details the technical approach, configuration, and key insights from deploying one of the largest language models currently available using distributed inference techniques.

To put this in perspective, Meta-Llama-3.1-405B has over 50 times more parameters than the 8B model I fine-tuned earlier. Even with the most advanced hardware, serving a model of this scale requires sophisticated distributed computing techniques. The primary challenges included:

- Memory requirements: Even quantized, the model demands hundreds of GB of VRAM

- Communication overhead: Coordinating computation across multiple GPUs and potentially multiple nodes

- Latency management: Ensuring reasonable response times despite the distributed architecture

- Stability: Maintaining reliable operation with such complex infrastructure

Technical Approach: vLLM + Distributed Inference

After evaluating several options, I chose vLLM as my inference framework for several compelling reasons:

- PagedAttention: vLLM's implementation dramatically improves memory efficiency and inference speed

- First-class support for distributed inference: Well-designed APIs for both Tensor Parallelism and Pipeline Parallelism

- Optimized kernel implementations: Highly efficient CUDA kernels for key operations

- Compatibility with Hugging Face models: Seamless integration with the models I needed to run

Understanding Distributed Inference Strategies

For this project, I implemented a combination of two parallelism strategies:

Tensor Parallelism (TP)

With tensor parallelism (tp_size=8), individual neural network operations are split across 8 GPUs. This approach:

- Divides computation of matrix multiplications and other operations across GPUs

- Reduces per-GPU memory requirements proportionally

- Requires high-bandwidth, low-latency connections between GPUs (ideally within a node)

- Works best for layers with large parameter counts (e.g., attention heads)

Pipeline Parallelism (PP)

With pipeline parallelism (pp_size=2), the neural network itself is split into sequential stages across two sets of GPUs. This approach:

- Assigns different layers of the model to different GPU groups

- Allows scaling across multiple nodes when needed

- Reduces memory requirements per node

- Introduces a sequential dependency in processing

The combination of TP=8 and PP=2 effectively distributed the model across 16 GPUs.

Implementation Details

Hardware Configuration

For this project, I utilized:

- 2 nodes from our Oracle cluster

- Each with 8x NVIDIA A100 40GB GPUs

- InfiniBand connections between nodes for high-speed communication

- Local NVMe storage for fast model loading

Software Implementation

I built upon the vLLM integration examples from the meta-llama/llama-recipes repository, making several custom modifications to optimize for our specific hardware.

Here's the core implementation for inference with the 405B model:

from vllm import LLM, SamplingParams

import argparse

import time

# Parse command-line arguments (simplified for readability)

parser = argparse.ArgumentParser()

parser.add_argument("--model_name", type=str, required=True)

parser.add_argument("--tp_size", type=int, default=8)

parser.add_argument("--pp_size", type=int, default=2)

parser.add_argument("--user_prompt", type=str, required=True)

parser.add_argument("--gpu-memory-utilization", type=float, default=0.9)

parser.add_argument("--max-model-len", type=int, default=8192)

args = parser.parse_args()

# Initialize the LLM with distributed settings

print(f"Initializing LLM with tp_size={args.tp_size}, pp_size={args.pp_size}...")

start_time = time.time()

llm = LLM(

model=args.model_name,

tensor_parallel_size=args.tp_size,

pipeline_parallel_size=args.pp_size,

gpu_memory_utilization=args.gpu_memory_utilization,

max_model_len=args.max_model_len,

enforce_eager=False, # Use CUDA graphs when possible

dtype="float16", # For FP8 models, vLLM handles conversion

)

initialization_time = time.time() - start_time

print(f"LLM initialized in {initialization_time:.2f} seconds")

# Set up sampling parameters for generation

sampling_params = SamplingParams(

temperature=0.7, # Slightly lower than default for more focused outputs

top_p=0.95, # Higher value to include more diversity in rare cases

max_tokens=512, # Generous output length

frequency_penalty=0.1 # Slight penalty for repetition

)

# Format prompt using Llama 3.1 chat template

prompt = f"<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\n{args.user_prompt}<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"

# Perform generation

print("Starting generation...")

gen_start_time = time.time()

outputs = llm.generate([prompt], sampling_params)

gen_time = time.time() - gen_start_time

print(f"Generation completed in {gen_time:.2f} seconds")

# Print output and statistics

output_text = outputs[0].outputs[0].text

tokens_generated = len(outputs[0].outputs[0].token_ids)

tokens_per_second = tokens_generated / gen_time

print("\n====== Generated Output ======\n")

print(output_text)

print("\n============================\n")

print(f"Generated {tokens_generated} tokens at {tokens_per_second:.2f} tokens/sec")

Quantization Strategies

For the 405B model, I tested two quantization approaches:

-

FP8 Quantization

- Meta officially supports FP8 for their 405B model

- Reduces precision from FP16 to FP8, cutting memory requirements nearly in half

- Maintains good quality for most use cases

- Command used:

python inference.py \ --model_name "meta-llama/Meta-Llama-3.1-405B-Instruct-FP8" \ --tp_size 8 \ --pp_size 2 \ --gpu-memory-utilization 0.95 \ --max-model-len 8192 \ --user_prompt "Explain quantum computing in simple terms" -

4-bit BNB Quantization Testing

- More aggressive quantization using bitsandbytes

- Further reduces memory footprint

- Limited compatibility with Pipeline Parallelism in vLLM

- Required adjustment to Tensor Parallelism only configuration

Performance Analysis

Running the 405B model with vLLM produced impressive results:

| Metric | FP8 Quantization | Notes |

|---|---|---|

| Model load time | 384 seconds | Measured from initialization to ready state |

| First token latency | 1.2 seconds | Time to first token generation |

| Throughput | 14.7 tokens/sec | Sustained generation rate |

| Memory utilization | 92.4% | Across all 16 GPUs |

| Max context length | 8192 tokens | Configured limit |

For comparison, the same prompts on Llama-3.1-8B generated at approximately 82 tokens/second—demonstrating the computational cost of the significantly larger model.

Technical Challenges and Solutions

Challenge 1: Memory Management

Initially, I encountered OOM (Out of Memory) errors when attempting to load the model with default settings.

Solution:

- Fine-tuned GPU memory utilization parameter (0.95)

- Adjusted tensor parallel size to spread memory requirements

- Optimized CUDA allocator settings with:

export CUDA_MEMORY_ALLOCATOR=max_split

Challenge 2: Communication Bottlenecks

Inter-node communication became a bottleneck for Pipeline Parallelism.

Solution:

- Configured NCCL for optimal performance with InfiniBand:

export NCCL_IB_DISABLE=0 export NCCL_IB_GID_INDEX=0 export NCCL_IB_HCA=mlx5 export NCCL_SOCKET_IFNAME=ib0

Challenge 3: Continuous Batch Processing

For production use cases, handling continuous inference requests efficiently was essential.

Solution:

- Implemented a batch inference loop with pre-warming:

# Pre-warm the model with a dummy request llm.generate(["Hello"], SamplingParams(max_tokens=1)) # Process batches with overlapping while True: batch = get_next_batch() outputs = llm.generate(batch, sampling_params) process_outputs(outputs)

Output Quality Assessment

Despite the distributed nature of the inference and quantization, the 405B model produced remarkably high-quality outputs. When compared to smaller models like the 8B and 70B variants, the 405B model demonstrated:

- Greater depth of reasoning: More comprehensive exploration of complex topics

- Enhanced instruction following: More precise adherence to specific prompts

- Nuanced world knowledge: More accurate and detailed factual information

- Superior coding abilities: More correct, efficient, and well-documented code generation

Conclusion and Future Work

Successfully deploying the Meta-Llama-3.1-405B model for distributed inference represents a significant technical achievement. By leveraging vLLM's distributed inference capabilities, we were able to efficiently utilize our available GPU resources to serve one of the largest publicly available language models.

The combination of Tensor Parallelism and Pipeline Parallelism proved highly effective for balancing memory constraints and computational efficiency. While quantization was necessary to make the model deployment feasible, the FP8 approach maintained output quality while significantly reducing resource requirements.

For future work, I'm exploring:

- Serving infrastructure: Implementing a production-ready API service around this setup

- Further optimization: Fine-tuning memory allocation and communication patterns

- Hybrid approaches: Combining CPU offloading with GPU inference for even larger models

- Quantization techniques: Investigating more advanced quantization methods that maintain quality while reducing resource needs

This project demonstrates that with the right technical approach, even models at the scale of hundreds of billions of parameters can be effectively deployed for practical applications.