DeepSeek-V3: A Technical Deep Dive into the Innovations Reshaping Open-Source LLMs

DeepSeek-V3 represents a monumental achievement in open-source language models, delivering GPT-4 level performance at a fraction of the training cost. This 671B parameter Mixture-of-Experts (MoE) model, with only 37B parameters activated per token, introduces several groundbreaking innovations that deserve careful technical examination.

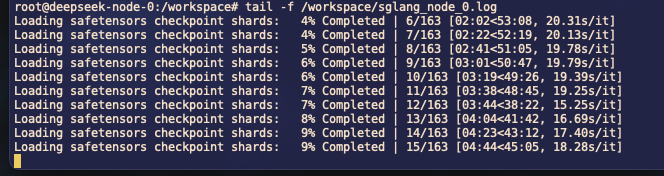

Figure: DeepSeek SGLang inference server

1. Revolutionary Load Balancing: The Auxiliary-Loss-Free Strategy

The Problem with Traditional MoE Load Balancing

Traditional MoE models face a fundamental dilemma. Without load balancing, routing collapses (most tokens are sent to the same few experts), which wastes expert capacity and hurts efficiency under expert parallelism. The usual fix is an auxiliary balancing loss, but too large an auxiliary loss impairs model performance by interfering with the routing patterns the model would otherwise learn.

DeepSeek-V3's Innovation: Bias-Based Dynamic Balancing

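DeepSeek-V3 pioneers an auxiliary-loss-free load balancing strategy that introduces a bias term b_i for each routed expert. The bias is added to the token-to-expert affinity score s_{i,t} only when choosing which K_r of the N_r routed experts a token is sent to:

$$g'_{i,t} = \begin{cases} s_{i,t}, & \text{if } s_{i,t} + b_i \in \operatorname{TopK}\left(\{\, s_{j,t} + b_j \mid 1 \le j \le N_r \,\}, K_r\right) \\ 0, & \text{otherwise} \end{cases}$$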

Key insights:

- The bias term is used only for routing decisions, not for computing gating values

- Biases are adjusted at the end of each training step: decreased by γ (the bias update speed) when an expert is overloaded, and increased by γ when it is underloaded

- This allows experts to specialize in different domains while maintaining overall balance
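The routing rule and the bias update can be sketched in NumPy. This is a minimal illustration under stated assumptions, not DeepSeek's implementation: the sigmoid affinities, the synthetic skewed scores, treating "overloaded" as "above the mean load", the value of γ, and the helper names `route`, `update_bias`, and `imbalance` are all hypothetical choices made for the example.

```python
import numpy as np

def route(scores, bias, k):
    """Top-k routing where the bias affects selection only, not the gate values.

    scores: (tokens, experts) affinity scores s_{i,t} (sigmoid outputs here)
    bias:   (experts,) per-expert bias b_i
    """
    biased = scores + bias                            # s_{i,t} + b_i, used only for ranking
    topk = np.argsort(-biased, axis=1)[:, :k]         # K_r experts with the largest biased score
    rows = np.arange(scores.shape[0])[:, None]
    gates = np.zeros_like(scores)
    gates[rows, topk] = scores[rows, topk]            # g'_{i,t} = s_{i,t} (unbiased) if selected
    gates /= gates.sum(axis=1, keepdims=True)         # normalize over the selected experts
    return topk, gates

def update_bias(bias, topk, num_experts, gamma):
    """One update: lower b_i for overloaded experts, raise it for underloaded ones."""
    load = np.bincount(topk.ravel(), minlength=num_experts)
    # Hypothetical definition: "overloaded" means above the mean load.
    return bias + gamma * np.sign(load.mean() - load)

def imbalance(topk, num_experts):
    """Max expert load divided by mean load (1.0 means perfectly balanced)."""
    load = np.bincount(topk.ravel(), minlength=num_experts)
    return load.max() / load.mean()

rng = np.random.default_rng(0)
num_tokens, num_experts, k, gamma = 1024, 16, 2, 0.005
skew = np.linspace(1.0, -1.0, num_experts)            # early experts are systematically favored
logits = rng.standard_normal((num_tokens, num_experts)) + skew
scores = 1.0 / (1.0 + np.exp(-logits))                # sigmoid affinities in (0, 1)

bias = np.zeros(num_experts)
topk, gates = route(scores, bias, k)
initial = imbalance(topk, num_experts)
for _ in range(500):
    topk, gates = route(scores, bias, k)
    bias = update_bias(bias, topk, num_experts, gamma)
topk, gates = route(scores, bias, k)
final = imbalance(topk, num_experts)
print(f"imbalance before: {initial:.2f}, after: {final:.2f}")
```

Because the gates are computed from the unbiased scores, the bias only changes which experts a token visits, never how strongly each selected expert's output is weighted.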

Empirical Results

The auxiliary-loss-free strategy shows consistent improvements:

- Small scale (1B MoE models): validation loss of 2.253, versus 2.258 with a sequence-wise auxiliary loss

- Large scale (228.7B model): Better performance on 9 out of 10 benchmarks

- Enables greater expert specialization patterns across different data domains

2. Multi-Token Prediction (MTP): Thinking Ahead

Architecture Design

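In addition to the standard next-token objective, DeepSeek-V3 is trained to predict further future tokens using sequential MTP modules. DeepSeek-V3 uses one extra depth (D = 1), so each position predicts the next two tokens. Each module:

- Shares its embedding layer and output head with the main model

- Maintains the complete causal chain at each prediction depth, predicting additional tokens sequentially rather than in parallel

- Combines the previous depth's representation with the embedding of a future token through a depth-specific projection matrix M_k, then passes the result through its own Transformer block

For the i-th token at depth k, the combination step is:

$$\mathbf{h}_i'^{k} = M_k\left[\mathrm{RMSNorm}(\mathbf{h}_i^{k-1});\ \mathrm{RMSNorm}(\mathrm{Emb}(t_{i+k}))\right]$$

where [·;·] denotes concatenation, M_k is a d × 2d matrix, and h_i^0 is the main model's output representation.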
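The combination step can be sketched directly from the formula. This is an illustrative NumPy version with hypothetical helper names (`rms_norm`, `mtp_combine`), random stand-in tensors, and an RMSNorm without its learned gain; it is not taken from DeepSeek's code.

```python
import numpy as np

def rms_norm(x, eps=1e-6):
    """RMSNorm without a learned gain (the gain is omitted here for brevity)."""
    return x / np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)

def mtp_combine(h_prev, emb_future, m_k):
    """h'^k_i = M_k [RMSNorm(h^{k-1}_i); RMSNorm(Emb(t_{i+k}))] for every position i.

    h_prev:     (n, d) depth-(k-1) representations (the main model's output when k = 1)
    emb_future: (n, d) shared-embedding vectors of the tokens k positions ahead
    m_k:        (d, 2d) depth-specific projection matrix
    """
    concat = np.concatenate([rms_norm(h_prev), rms_norm(emb_future)], axis=-1)  # (n, 2d)
    return concat @ m_k.T  # row-vector form of M_k @ [a; b]

rng = np.random.default_rng(0)
seq_len, d, vocab, k = 6, 8, 50, 1
tokens = rng.integers(0, vocab, size=seq_len)
embedding = rng.standard_normal((vocab, d))        # shared with the main model
h_main = rng.standard_normal((seq_len, d))         # stand-in for main-model outputs h^0
m_1 = rng.standard_normal((d, 2 * d)) / np.sqrt(2 * d)

# Position i (0-indexed) is paired with the token k steps ahead, t_{i+k}.
h_prime = mtp_combine(h_main[: seq_len - k], embedding[tokens[k:]], m_1)
print(h_prime.shape)  # (5, 8)
```

In the full module, `h_prime` would then pass through the depth-specific Transformer block and the shared output head to produce the depth-k predictions; both are omitted here.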

Training Benefits

The MTP strategy provides:

- Denser training signals: More than one prediction target per position

- Better representation learning: Encourages the model to "pre-plan" its representations for future tokens

- Improved benchmark performance: Gains on most evaluation benchmarks in the paper's ablations
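The way the extra depths enter training can be written compactly. Following our reading of the report's formulation (the name L_main for the ordinary next-token loss is our notation), each depth k contributes a cross-entropy loss L^k_MTP on tokens k positions beyond the usual next token; the depth losses are averaged and scaled by a weight λ, and the result is added as an extra objective:

$$\mathcal{L}_{\text{MTP}} = \frac{\lambda}{D}\sum_{k=1}^{D}\mathcal{L}_{\text{MTP}}^{k}, \qquad \mathcal{L}_{\text{total}} = \mathcal{L}_{\text{main}} + \mathcal{L}_{\text{MTP}}$$

With D = 1, as in DeepSeek-V3, every position is supervised on two targets (the next token and the one after it), which is where the denser signal comes from. The report sets λ to 0.3 for the first 10T training tokens and 0.1 for the remaining 4.8T.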

Inference Advantages

During inference:

- MTP modules can be discarded for standard generation

- Or repurposed for speculative decoding, reaching about 1.8x the tokens per second (TPS)

- Second-token acceptance rate: 85-90% across various generation topics
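A back-of-the-envelope model shows why an 85-90% acceptance rate translates into roughly 1.8x throughput. The sketch assumes one drafted token per step that is kept with a fixed probability, and it ignores the cost of running the MTP module and the verification pass; the function names are hypothetical.

```python
import random

def expected_tokens_per_step(acceptance_rate: float) -> float:
    """Idealized tokens emitted per decoding step with one MTP draft token.

    The next token is always produced; the drafted second token is kept with
    probability `acceptance_rate`. Drafting/verification overhead is ignored.
    """
    return 1.0 + acceptance_rate

def simulate_tokens_per_step(acceptance_rate: float, steps: int, seed: int = 0) -> float:
    """Monte Carlo check of the same simplified model."""
    rng = random.Random(seed)
    emitted = 0
    for _ in range(steps):
        emitted += 1                              # verified next token
        if rng.random() < acceptance_rate:        # drafted second token accepted
            emitted += 1
    return emitted / steps

for p in (0.85, 0.90):
    print(p, expected_tokens_per_step(p), simulate_tokens_per_step(p, 100_000))
```

The idealized bound of 1.85-1.9 tokens per step sits just above the reported 1.8x TPS, which is consistent with a modest overhead for the extra module.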

3. FP8 Training at Scale: Breaking New Ground

The Technical Challenge

Training in FP8 faces critical obstacles:

- Limited dynamic range with reduced exponent bits

- Activation outliers causing quantization errors

- Accumulation precision limited to ~14 bits on H800 GPUs

DeepSeek-V3's Multi-Pronged Solution

Fine-Grained Quantization

- Activations: 1×128 tile-wise quantization (per token, per 128 channels)

- Weights: 128×128 block-wise quantization

- Key insight: Smaller quantization groups better handle outliers
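The effect of group size on outliers can be simulated. NumPy has no FP8 type, so this sketch uses a hypothetical approximate E4M3 rounding helper (3 mantissa bits, minimum normal exponent -6, subnormal spacing 2^-9, saturation at ±448, NaN handling ignored) and compares one scale per tensor against 1×128 activation tiles and 128×128 weight blocks. All function names are illustrative, not DeepSeek's kernels.

```python
import numpy as np

E4M3_MAX = 448.0  # largest finite E4M3 magnitude

def round_to_e4m3(x):
    """Approximate E4M3 rounding: 3 mantissa bits, minimum normal exponent -6,
    subnormal spacing 2**-9, saturation at +/-448 (NaN handling ignored)."""
    x = np.clip(x, -E4M3_MAX, E4M3_MAX)
    exp = np.floor(np.log2(np.maximum(np.abs(x), 2.0 ** -6)))
    step = 2.0 ** (exp - 3)               # 8 representable values per power-of-two interval
    return np.round(x / step) * step

def fake_quant(x, scale):
    """Simulated FP8 round trip: scale into range, round to E4M3, scale back."""
    return round_to_e4m3(x / scale) * scale

def tilewise_activations(x, tile=128):
    """One scale per token per 128 channels (1 x 128 tiles). x: (tokens, channels)."""
    t, c = x.shape
    tiles = x.reshape(t, c // tile, tile)
    scale = np.maximum(np.abs(tiles).max(axis=-1, keepdims=True), 1e-12) / E4M3_MAX
    return fake_quant(tiles, scale).reshape(t, c)

def blockwise_weights(w, block=128):
    """One scale per 128 x 128 block. w: (out_channels, in_channels)."""
    r, c = w.shape
    blocks = w.reshape(r // block, block, c // block, block)
    scale = np.maximum(np.abs(blocks).max(axis=(1, 3), keepdims=True), 1e-12) / E4M3_MAX
    return fake_quant(blocks, scale).reshape(r, c)

def per_tensor(x):
    """Baseline: a single scale for the whole tensor."""
    return fake_quant(x, max(np.abs(x).max(), 1e-12) / E4M3_MAX)

rng = np.random.default_rng(0)
x = 0.01 * rng.standard_normal((4, 512))
x[0, 0] = 1000.0                          # a single activation outlier
err_tensor = np.abs(per_tensor(x) - x).mean()
err_tile = np.abs(tilewise_activations(x) - x).mean()

w = 0.02 * rng.standard_normal((256, 256))
w_err = np.linalg.norm(blockwise_weights(w) - w) / np.linalg.norm(w)
print(f"activation error, per-tensor: {err_tensor:.2e}, 1x128 tiles: {err_tile:.2e}")
print(f"relative weight error, 128x128 blocks: {w_err:.3f}")
```

With a single scale, the outlier pushes every other value into E4M3's coarse subnormal range; with tiles, only the tile containing the outlier pays that price.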

High-Precision Accumulation

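DeepSeek-V3 periodically promotes partial results from Tensor Cores to CUDA cores. Along the inner dimension K, every N_C = 128 elements (equivalent to four WGMMA operations):

- The Tensor Cores complete the MMA for that interval at their limited accumulation precision

- The partial results are copied to FP32 registers on the CUDA cores

- The CUDA cores add them into a full-precision FP32 accumulator

- The per-group scaling factors are applied at the same point, performing dequantization at negligible extra cost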
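A crude numerical model illustrates why promotion every N_C elements helps. The sketch assumes, for illustration only, that the Tensor Core accumulator keeps 14 significant bits and rounds toward zero; real hardware behavior differs in detail, and FP8 inputs and scaling factors are left out. The helper names are hypothetical.

```python
import math
import numpy as np

def truncate_to_bits(x: float, bits: int = 14) -> float:
    """Keep `bits` significant bits, rounding toward zero (a crude accumulator model)."""
    m, e = math.frexp(x)                      # x = m * 2**e with 0.5 <= |m| < 1
    return math.ldexp(math.trunc(m * 2**bits) / 2**bits, e)

def dot_limited(a, b, bits=14):
    """Every product is added into the limited-precision accumulator."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = truncate_to_bits(acc + float(x) * float(y), bits)
    return acc

def dot_promoted(a, b, n_c=128, bits=14):
    """Accumulate n_c products at limited precision, then add the partial sum into
    an FP32 accumulator (standing in for the copy to CUDA-core FP32 registers)."""
    acc32 = np.float32(0.0)
    for start in range(0, len(a), n_c):
        partial = dot_limited(a[start:start + n_c], b[start:start + n_c], bits)
        acc32 = np.float32(acc32 + np.float32(partial))
    return float(acc32)

rng = np.random.default_rng(0)
a, b = rng.random(4096), rng.random(4096)     # K = 4096, all products positive
exact = float(np.dot(a, b))
err_limited = abs(dot_limited(a, b) - exact) / exact
err_promoted = abs(dot_promoted(a, b) - exact) / exact
print(f"relative error, limited: {err_limited:.2e}, promoted every 128: {err_promoted:.2e}")
```

The limited accumulator loses more as its running sum grows, because each new product is added at an ever coarser spacing; short intervals keep the partial sums small, and the FP32 accumulator absorbs the large total.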

Strategic Format Choices

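- Uses E4M3 for all tensors, rather than the hybrid scheme of prior work (E4M3 in the forward pass, E5M2 for gradients)

- Online quantization: scaling factors are computed from each tile's or block's current maximum absolute value, instead of delayed quantization, which infers them from previous iterations

- Customized E5M6 format for the inputs of the Linear layer after the attention operator, which are reused in the attention backward pass and are therefore precision-sensitive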

Results

- First validation of FP8 mixed-precision training on a model of this scale (671B parameters)

- Relative loss error consistently below 0.25% vs BF16

- Enables both faster training and reduced memory usage

4. DualPipe: Revolutionizing Pipeline Parallelism

The Innovation

DualPipe introduces bidirectional pipeline scheduling with sophisticated computation-communication overlap:

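Pipeline bubble comparison (P pipeline stages; F and B are the execution times of a forward chunk and a full backward chunk, W of a backward-for-weights chunk, and F&B of a pair of mutually overlapped forward and backward chunks):

- 1F1B: (P-1)(F+B)

- ZB1P: (P-1)(F+B-2W)

- DualPipe: (P/2-1)(F&B+B-3W), at the cost of keeping two copies of the model parameters and slightly more activation memory (P+1 versus P)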
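The bubble formulas are easy to compare numerically. In this sketch P is the number of pipeline stages, F and B are forward and full-backward chunk times, W is the backward-for-weights time, and F&B is the time of an overlapped forward/backward pair. The chunk times below are hypothetical values (F&B is set below F + B to reflect overlap), not measurements, and the function names are illustrative.

```python
def bubble_1f1b(p, f, b):
    """1F1B bubble time: (P-1)(F+B)."""
    return (p - 1) * (f + b)

def bubble_zb1p(p, f, b, w):
    """Zero-bubble ZB1P: (P-1)(F+B-2W)."""
    return (p - 1) * (f + b - 2 * w)

def bubble_dualpipe(p, f_and_b, b, w):
    """DualPipe: (P/2-1)(F&B+B-3W); stages are fed from both ends."""
    if p % 2:
        raise ValueError("DualPipe requires an even number of pipeline stages")
    return (p // 2 - 1) * (f_and_b + b - 3 * w)

# Hypothetical chunk times in arbitrary units.
P, F, B, W, F_AND_B = 8, 1.0, 2.0, 1.0, 2.5
print("1F1B:    ", bubble_1f1b(P, F, B))
print("ZB1P:    ", bubble_zb1p(P, F, B, W))
print("DualPipe:", bubble_dualpipe(P, F_AND_B, B, W))
```

The (P/2-1) factor roughly halves the number of bubble slots, while the -3W term and the overlapped F&B shrink each slot further.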

Key Components

- Bidirectional scheduling: Feeds micro-batches from both pipeline ends

- Component interleaving: Splits each chunk into attention, all-to-all dispatch, MLP, and all-to-all combine, then rearranges them so communication overlaps with computation

- Manual SM allocation: Optimizes GPU resources between computation and communication

Communication Optimization

DeepSeek-V3's all-to-all communication leverages network topology:

- Limits each token to 4 nodes maximum

- First transmits via InfiniBand to target nodes

- Then forwards via NVLink to specific expert-hosting GPUs

- Achieves near-zero all-to-all communication overhead through full overlap, as long as the computation-to-communication ratio is kept constant as the model scales
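Node-limited routing can be sketched as a two-stage top-k. Each node is scored by the sum of its K_r/M highest expert affinities, the M best nodes are kept, and the final K_r experts are chosen only from those nodes. The layout (256 experts, 32 per node, contiguous indices) matches the 8-node training deployment but is an assumption here, as is ignoring the load-balancing bias; the function name is hypothetical.

```python
import numpy as np

def node_limited_topk(scores, experts_per_node, max_nodes, k):
    """Return k expert indices for one token, drawn from at most `max_nodes` nodes."""
    per_node = scores.reshape(-1, experts_per_node)            # (nodes, experts_per_node)
    top_per_node = np.sort(per_node, axis=1)[:, -(k // max_nodes):]
    node_scores = top_per_node.sum(axis=1)                      # sum of each node's top k/M scores
    chosen_nodes = np.argsort(-node_scores)[:max_nodes]
    masked = np.full(per_node.shape, -np.inf)
    masked[chosen_nodes] = per_node[chosen_nodes]               # keep experts on chosen nodes only
    return np.argsort(-masked.ravel())[:k]

EXPERTS, PER_NODE, NODES_MAX, K = 256, 32, 4, 8  # assumed layout: 8 nodes x 32 experts

# Adversarial case: the 8 best experts sit on 8 different nodes.
scores = np.zeros(EXPERTS)
scores[np.arange(8) * PER_NODE] = 1.0
naive = np.argsort(-scores)[:K]
limited = node_limited_topk(scores, PER_NODE, NODES_MAX, K)
print("nodes touched, naive:", len(set(naive // PER_NODE)),
      "node-limited:", len(set(limited // PER_NODE)))
```

Capping the fan-out at M = 4 nodes bounds how much of each token's traffic must cross InfiniBand, which is what makes the IB-then-NVLink forwarding scheme above affordable.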

5. Training Efficiency: The Numbers That Matter

Cost Breakdown

- Pre-training: 2.664M H800 GPU hours (14.8T tokens)

- Context extension: 119K GPU hours

- Post-training: 5K GPU hours

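- Total: 2.788M GPU hours (~$5.576M at $2 per GPU hour), covering only the official training run and excluding prior research and ablation experiments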

Efficiency Metrics

- 180K GPU hours per trillion tokens during pre-training

- Pre-training completed in less than two months on 2,048 H800 GPUs

- Zero rollbacks: No irrecoverable loss spikes throughout training
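The cost and throughput figures above can be checked with a few lines of arithmetic. The inputs are the reported GPU hours, token count, cluster size, and $2/hour rental price; converting GPU hours to wall-clock days assumes all 2,048 GPUs are busy the whole time, which is a simplification.

```python
GPU_HOURS = {                 # H800 GPU hours reported for each stage
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.0      # USD, the rental price assumed in the estimate
NUM_GPUS = 2048
PRETRAIN_TOKENS_T = 14.8      # pre-training tokens, in trillions

total_hours = sum(GPU_HOURS.values())
total_cost = total_hours * PRICE_PER_GPU_HOUR
hours_per_trillion = GPU_HOURS["pre-training"] / PRETRAIN_TOKENS_T
days_per_trillion = hours_per_trillion / NUM_GPUS / 24     # wall-clock days on the full cluster
pretrain_days = GPU_HOURS["pre-training"] / NUM_GPUS / 24

print(f"total: {total_hours:,} GPU hours, ${total_cost:,.0f}")
print(f"{hours_per_trillion:,.0f} GPU hours per trillion tokens "
      f"= {days_per_trillion:.1f} days on {NUM_GPUS} GPUs")
print(f"pre-training wall clock: {pretrain_days:.1f} days")
```

The per-trillion figure works out to about 3.7 days on the full cluster, and the whole pre-training run to roughly 54 days, in line with "less than two months".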

6. Architectural Refinements

Multi-Head Latent Attention (MLA)

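- KV compression dimension: 512, plus a 64-dimensional decoupled RoPE key, so each token caches 576 elements per layer (vs 2 × 128 heads × 128 dims = 32,768 for standard multi-head attention)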

- Query compression dimension: 1,536

- Maintains performance while drastically reducing KV cache
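The KV cache savings can be worked out per token. The dimensions are DeepSeek-V3's (61 layers, 128 heads of dimension 128, a 512-dimensional KV latent, and a 64-dimensional decoupled RoPE key shared across heads); the BF16 cache precision and the comparison against a standard MHA layer of the same shape are assumptions made for the example.

```python
N_LAYERS = 61          # Transformer layers in DeepSeek-V3
N_HEADS = 128          # attention heads
HEAD_DIM = 128         # per-head key/value dimension
KV_LATENT_DIM = 512    # d_c: compressed KV latent, cached per token per layer
ROPE_KEY_DIM = 64      # decoupled RoPE key, shared across heads, also cached
BYTES_PER_ELEM = 2     # assumption: cache stored in BF16

mha_elems = 2 * N_HEADS * HEAD_DIM        # full keys and values per token per layer
mla_elems = KV_LATENT_DIM + ROPE_KEY_DIM  # latent vector + RoPE key per token per layer

mha_kib = mha_elems * N_LAYERS * BYTES_PER_ELEM / 1024
mla_kib = mla_elems * N_LAYERS * BYTES_PER_ELEM / 1024
ratio = mha_elems / mla_elems
print(f"per token: MHA {mha_kib:.1f} KiB, MLA {mla_kib:.1f} KiB, reduction {ratio:.1f}x")
```

Under these assumptions the cache shrinks from about 3.8 MiB to under 70 KiB per token, roughly a 57x reduction.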

DeepSeekMoE Configuration

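- 256 routed experts + 1 shared expert per MoE layer (every FFN except those in the first three layers is an MoE layer)

- 8 routed experts activated per token, in addition to the always-active shared expert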

- Node-limited routing to maximum 4 nodes

- No token dropping in training or inference

7. Post-Training Innovations

Knowledge Distillation from DeepSeek-R1

DeepSeek-V3 employs sophisticated distillation from reasoning models:

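- Domain-specific expert models generate two kinds of SFT samples per instance: the problem with its original response, and the problem with a system prompt and an R1 response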

- System prompts guide reflection and verification mechanisms

- Rejection sampling ensures high-quality training data

- Balances reasoning capability with response conciseness

Self-Rewarding Mechanism

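For general questions where hard-coded, rule-based feedback is impractical, the model uses itself as a reward source through:

- A constitutional AI approach, using DeepSeek-V3's own voting evaluation results as feedback

- Additional constitutional inputs that steer optimization toward the desired (constitutional) direction

- Strong judging ability to support this: 87.0% on RewardBench, comparable to GPT-4o and Claude-3.5-Sonnet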

Performance Highlights

Base Model Achievements

- MATH (4-shot): 61.6% (vs LLaMA-3.1 405B: 49.0%)

- HumanEval: 65.2% (vs Qwen2.5 72B: 53.0%)

- MMLU-Pro: 64.4% (vs LLaMA-3.1 405B: 52.8%)

Chat Model Performance

- AIME 2024: 39.2% (vs next best: 23.3%)

- Codeforces: 51.6th percentile (roughly double the strongest baseline in the comparison)

- SWE-Bench Verified: 42.0% resolved

Conclusion

DeepSeek-V3's technical contributions extend beyond incremental improvements; they represent a fundamental rethinking of how large language models can be trained efficiently. The auxiliary-loss-free load balancing addresses a long-standing MoE training dilemma. The successful FP8 mixed-precision training at the 671B-parameter scale pushes the boundaries of low-precision training. DualPipe's computation-communication overlap shows that cross-node MoE communication, a major distributed training bottleneck, can be almost entirely hidden through careful scheduling.

Perhaps most significantly, the $5.576M training cost (which covers only the official training run, not prior research or ablation experiments) suggests that frontier-level capabilities can be reached without frontier-scale budgets. This has immediate implications for research accessibility and the pace of open-source AI development. The techniques introduced here, particularly the co-design of algorithms, frameworks, and hardware utilization, provide a reproducible blueprint for efficient large-scale training that other teams can build upon.

The convergence of these innovations in a single system demonstrates that the path to more capable AI systems isn't solely through scale, but through engineering precision and algorithmic creativity. As hardware continues to evolve and these techniques mature, we can expect the gap between open and closed-source models to continue narrowing, fundamentally changing the landscape of AI research and deployment.